GPU

Execution Building Blocks

-

GPUs Explained - Branch Education.

-

Cool.

-

-

From smallest to largest.

Thread

-

Has its own registers and private variables.

Warp / Wave

-

Fixed-size group of threads executed together in lockstep.

-

These are hardware scheduling units — the smallest batch of threads that can be executed together.

-

Each SM/CU scheduler issues instructions from one warp/wave at a time to its execution units; an SM/CU usually has several schedulers and keeps many warps/waves resident.

-

The SM does not literally execute a whole warp in a single cycle always; the SM issues instructions for a warp and can interleave instructions from multiple warps to hide latency.

-

The warp is the smallest scheduling/issue granularity, but instruction dispatch and active lanes depend on pipeline and issue width.

-

Reconvergence is implemented by hardware (and compiler) mechanisms; divergent threads are masked and the SM executes each path serially until reconvergence.

-

-

Wavefront (AMD) or Warp (NVIDIA).

-

Common sizes:

-

NVIDIA warp: 32 threads.

-

AMD wavefront: 64 threads on GCN/CDNA; RDNA runs 32-thread waves natively and also supports 64.

-

-

These threads share a program counter and execute the same instruction at the same time (SIMT model).

-

If threads diverge in control flow, the hardware masks off threads not taking the current branch until they reconverge.
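The masking behavior described above can be sketched with a small host-side model. This is only an illustration, not how any particular GPU implements it: the function name `run_divergent_branch` and the branch body are made up for the example, and each "pass" stands for one serialized execution of a branch path.

```python
def run_divergent_branch(values):
    """Model one warp executing `if v % 2 == 0: v *= 2 else: v += 1` under SIMT."""
    lanes = list(values)
    # Each lane evaluates the branch condition; the results form the active mask.
    taken_mask = [v % 2 == 0 for v in lanes]
    passes = 0

    # Pass 1: lanes that take the branch execute, the others are masked off.
    if any(taken_mask):
        passes += 1
        lanes = [v * 2 if active else v for v, active in zip(lanes, taken_mask)]

    # Pass 2: the mask is inverted and the else path runs.
    else_mask = [not t for t in taken_mask]
    if any(else_mask):
        passes += 1
        lanes = [v + 1 if active else v for v, active in zip(lanes, else_mask)]

    # Reconvergence: all lanes are active again from here on.
    return lanes, passes


uniform, uniform_passes = run_divergent_branch([2, 4, 6, 8])
mixed, mixed_passes = run_divergent_branch([1, 2, 3, 4])
```

When every lane agrees on the condition, only one path is executed; when lanes disagree, both paths run back to back, which is why divergence inside a warp costs time even though each lane only needs one of the results.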

Workgroup (API abstraction)

-

Defined by you in Vulkan’s compute shader or GLSL/HLSL.

-

Group of threads that can share shared memory within a single SM/CU.

-

A workgroup is assigned to a single SM/CU and is never spread across several SMs/CUs at once; preemption or a context switch may save and later restore its state, but that is handled by the hardware and driver.

-

Practically, code semantics assume the workgroup runs on a single SM until completion.

-

-

Size: arbitrary (within hardware limits), e.g., `local_size_x = 256`.

-

Purpose: group of threads that:

-

Can share shared memory / LDS .

-

Can synchronize using `barrier()` calls.

-

-

Hardware: The entire workgroup runs within one SM/CU (so they can share its on-chip memory).

-

Example:

-

If you set `local_size_x = 256`, that’s 256 threads in the workgroup.
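As a rough sketch of what a workgroup size implies for a dispatch, the arithmetic below computes how many workgroups cover a given number of items and how many warps/waves each workgroup occupies. The helper name `dispatch_size` and the default subgroup size of 32 are assumptions for illustration; the real subgroup size should be queried from the device.

```python
def dispatch_size(total_items, local_size_x=256, subgroup_size=32):
    # Round up so every item is covered; the shader must bounds-check the tail.
    workgroups = (total_items + local_size_x - 1) // local_size_x
    # Each workgroup is executed as this many warps/waves.
    subgroups_per_workgroup = (local_size_x + subgroup_size - 1) // subgroup_size
    # Invocations in the last workgroup that have no item to process.
    idle_invocations = workgroups * local_size_x - total_items
    return workgroups, subgroups_per_workgroup, idle_invocations


result = dispatch_size(1000)
wave64_result = dispatch_size(1000, local_size_x=256, subgroup_size=64)
```

The workgroup count is what would be passed to the dispatch call, and the idle invocations are the reason compute shaders usually start with an index-in-range check.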

-

-

Subgroup (API Abstraction)

-

Subset of threads in a workgroup that maps to a warp/wave (e.g., 32 threads).

-

Enables warp-level operations (shuffles, reductions) without shared memory .

-

Exposed in Vulkan/OpenCL; size is queried at runtime.
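A shuffle-based reduction, the typical example of a subgroup operation, can be modeled on the host as follows. This is a sketch under the assumption of a power-of-two subgroup size; `subgroup_reduce_add` is a hypothetical name, and the list stands in for the per-lane registers that a real shuffle instruction would exchange.

```python
def subgroup_reduce_add(values):
    lanes = list(values)
    n = len(lanes)  # assumed to be a power of two, like typical subgroup sizes
    offset = n // 2
    while offset > 0:
        # Every lane reads the register of lane (i + offset) in the same step.
        # Lanes reading past the end get 0, which does not affect lane 0.
        shuffled = [lanes[i + offset] if i + offset < n else 0 for i in range(n)]
        lanes = [a + b for a, b in zip(lanes, shuffled)]
        offset //= 2
    # After log2(n) steps, lane 0 holds the sum of all lanes.
    return lanes[0]


total = subgroup_reduce_add(range(32))
```

The point of the pattern is that the data moves directly between lanes in log2(n) steps, with no shared-memory traffic and no barrier.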

-

SM (Streaming Multiprocessor) / CU (Compute Unit)

-

SM = Streaming Multiprocessor (NVIDIA terminology).

-

CU = Compute Unit (AMD terminology).

-

Hardware block that runs multiple warps/waves.

-

This is the fundamental hardware block that executes shader threads.

-

Has per-SM caches and shared memory.

-

Registers (not shared between SMs).

-

Shared memory (LDS).

-

L1 cache (per-SM).

-

Implication: If one SM’s L1 cache is filled with certain data, another SM won’t see it — coherence happens at L2.

-

-

Resource Partitioning :

-

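Registers per thread are fixed at compile time, up to a hardware maximum (e.g., 255 regs/thread on NVIDIA Ampere); the SM's register file is partitioned among all resident threads.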

-

Shared memory configurable (e.g., 64–164 KB on NVIDIA).

-

-

Concurrent Execution :

-

Runs multiple warps/waves simultaneously (e.g., 64 warps/SM on NVIDIA).

-

Hides latency via zero-cost warp switching.
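How register usage limits the number of warps available for latency hiding can be estimated with simple arithmetic. The sketch below assumes a register file of 65536 32-bit registers per SM and a cap of 64 resident warps; both are illustrative defaults, and real hardware also applies allocation granularity and shared-memory limits that are ignored here.

```python
def resident_warps(regs_per_thread, regs_per_sm=65536, warp_size=32, max_warps=64):
    # All threads of a warp get the same register allocation.
    regs_per_warp = regs_per_thread * warp_size
    # The register file is split among resident warps, up to the hardware cap.
    return min(max_warps, regs_per_sm // regs_per_warp)


light = resident_warps(32)
medium = resident_warps(64)
heavy = resident_warps(255)
```

Under these assumptions a shader using 32 registers per thread can keep the full 64 warps resident, while one using 255 registers leaves only 8, so the scheduler has far fewer warps to switch to when one stalls on memory.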

-

-

Each SM/CU has :

-

Its own set of registers (private to threads assigned to it).

-

Its own shared memory / LDS (Local Data Store), accessible to all threads in a workgroup.

-

Access to L1 cache and special-function units (SFUs).

-

-

Vulkan equivalent: A workgroup in a compute shader runs entirely within one SM/CU.

TPC/GPC (Texture Processing Cluster / Graphics Processing Cluster) / SA (Shader Array)

-

A group of SMs/CUs that may share intermediate caches or specialized hardware.

-

NVIDIA :

-

"Texture Processing Cluster" (TPC) or "Graphics Processing Cluster" (GPC)

-

A TPC typically pairs 2 SMs; a GPC groups several TPCs that share raster/tessellation units.

-

-

AMD :

-

"Shader Array" (SA) in RDNA: a cluster-like grouping of several Workgroup Processors (WGPs).

-

"Workgroup Processor" (WGP): groups 2 CUs that share an instruction cache.

-

-

Shared at cluster level: Sometimes texture units, geometry units, or a shared instruction cache.

GPU Die

-

Graphics Engine (e.g., AMD's Shader Engine, NVIDIA's GPC).

-

Contains multiple clusters + fixed-function units (geometry, raster).

GPU

-

All clusters together, sharing the L2 cache and global memory.

Specialized units & instructions

SFUs (special function units)

-

Transcendental operations (sin/cos, rsqrt) may be executed on SFUs, with different latency and throughput than ordinary ALU operations.

Tensor/Matrix cores, Ray-tracing cores

-

Specialized units for matrix multiply/accumulate or ray traversal change the performance characteristics of algorithms that use them.

Asynchronous copies / DMA engines

-

Async copy mechanisms (device to shared, or staging) allow memory transfers to overlap with compute, when supported.

Memory


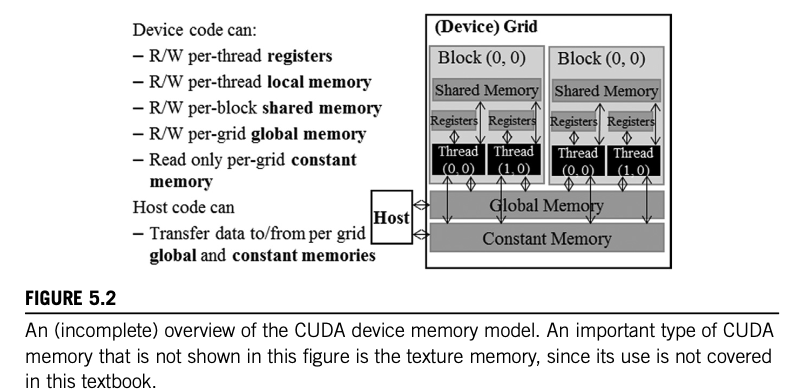

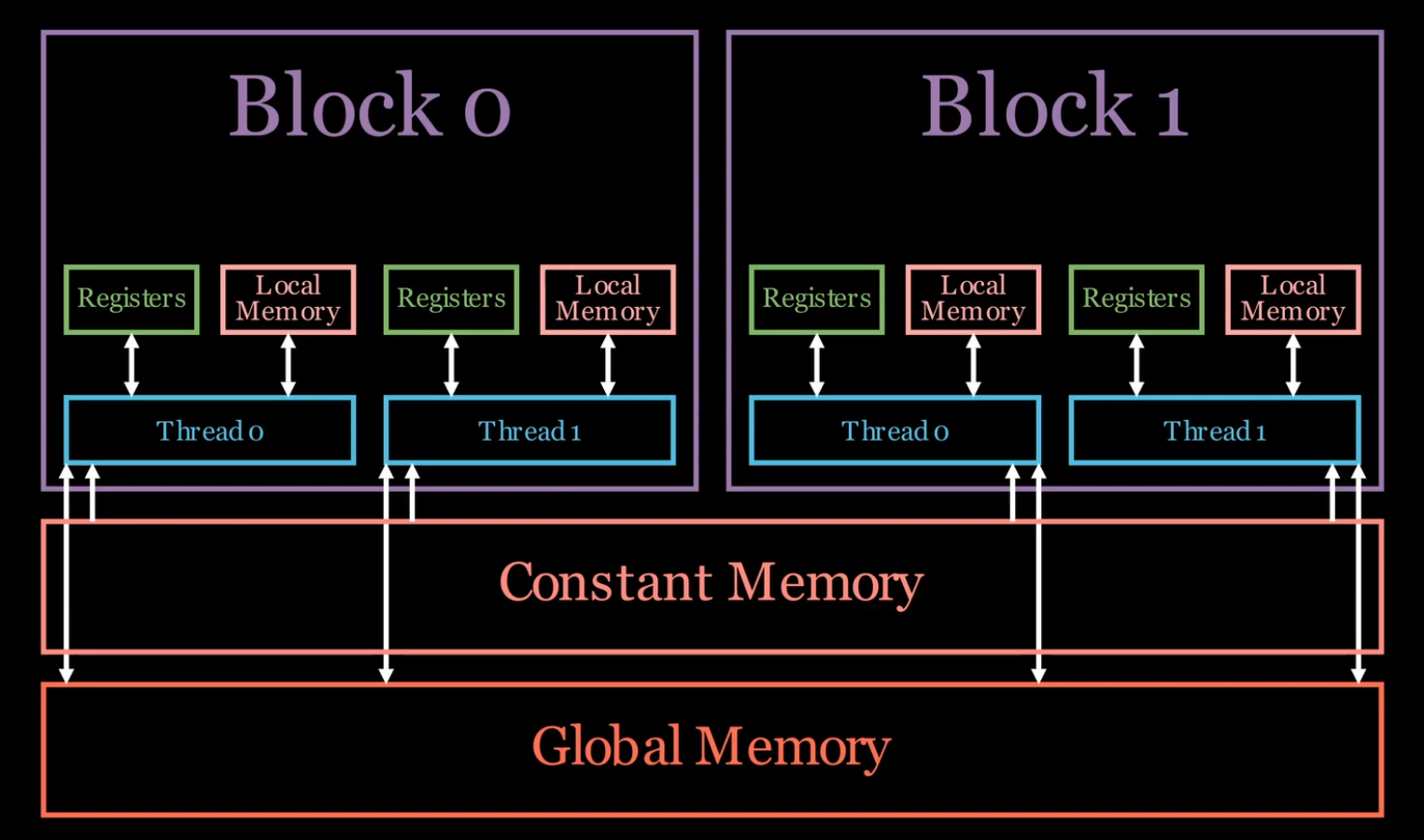

Registers (on-chip, in each shader core)

-

Physical Location :

-

On-chip in each shader core.

-

-

Scope / Visibility :

-

Private to a single thread.

-

-

Registers are the fastest memory components on a GPU, comprising the register file that supplies data directly into the CUDA cores.

-

A kernel function uses registers to store variables private to the thread and accessed frequently.

-

Both registers and shared memory are on-chip memories where variables residing in these memories can be accessed at very high speeds in a parallel manner.

-

By leveraging registers effectively, data reuse can be maximized and performance can be optimized.

-

Per-thread fast storage, not addressable from shaders as normal memory.

Shared / "scratch" (on-chip, in each SM/CU)

-

Physical Location :

-

On-chip in each compute unit (SM/CU).

-

-

Scope / Visibility :

-

Shared by threads in a workgroup.

-

-

Low-latency buffer for thread-group cooperation. Useful for explicitly managed caches and reductions.

-

Is accessible to all threads in the same workgroup (a "block" in CUDA terms) and lasts for the workgroup’s lifetime.

Texture memory (on-chip, specialized hardware)

-

Physical Location :

-

On-chip, specialized hardware

-

-

Scope / Visibility :

-

Optimized for spatial locality (texture) or broadcast (constant)

-

-

Is another read-only memory type ideal for physically adjacent data access. Its use can mitigate memory traffic and increase performance compared to global memory.

L1 Cache (on chip, per SM/CU)

-

Physical Location :

-

On-chip per-SM/CU.

-

-

Scope / Visibility :

-

Caches global and local (spilled) memory accesses; shared memory is a separate on-chip store rather than something L1 caches.

-

-

L1 or level 1 cache is attached to the processor core directly.

-

It also backs register spills: when a thread's live data exceeds its register allocation, the spilled values go to local memory, which is cached in L1.

-

Small, very low-latency caches often configurable (texture vs load/store/shared) on many architectures; behavior and size vary by vendor/generation.

-

L1 may be partitioned between shared memory and cache.

L2 Cache (on-chip, shared across GPU)

-

Physical Location :

-

On-chip, shared across GPU.

-

-

Scope / Visibility :

-

GPU-wide.

-

-

L2 or level 2 cache is larger and often shared across SMs.

-

Unlike the L1 cache(s), there is only one L2 cache.

-

Larger cache shared across multiple SMs/CUs; services DRAM, texture and load/store requests; a common coherence point for the chip.

Local (off-chip, in global memory, per-thread)

-

Physical Location :

-

Off-chip in global memory.

-

“Local” in shader languages is a per-thread address space.

-

Physically it may be kept in registers or spilled to device memory (global DRAM, cached in L1/L2), depending on register pressure and compiler decisions, so it is not always off-chip.

-

-

Scope / Visibility :

-

Private to a thread; spilled data is backed by DRAM.

-

-

Is private to each thread, but it’s slower than register memory.

Constant Cache (?)

-

Constant cache captures frequently used variables for each kernel leading to improved performance.

-

Constant memory holds values that do not change while a kernel runs. Because these values are read-only, the constant cache needs no write-back or coherence logic, which keeps the hardware simple, and it can broadcast a single value to all threads in a warp.

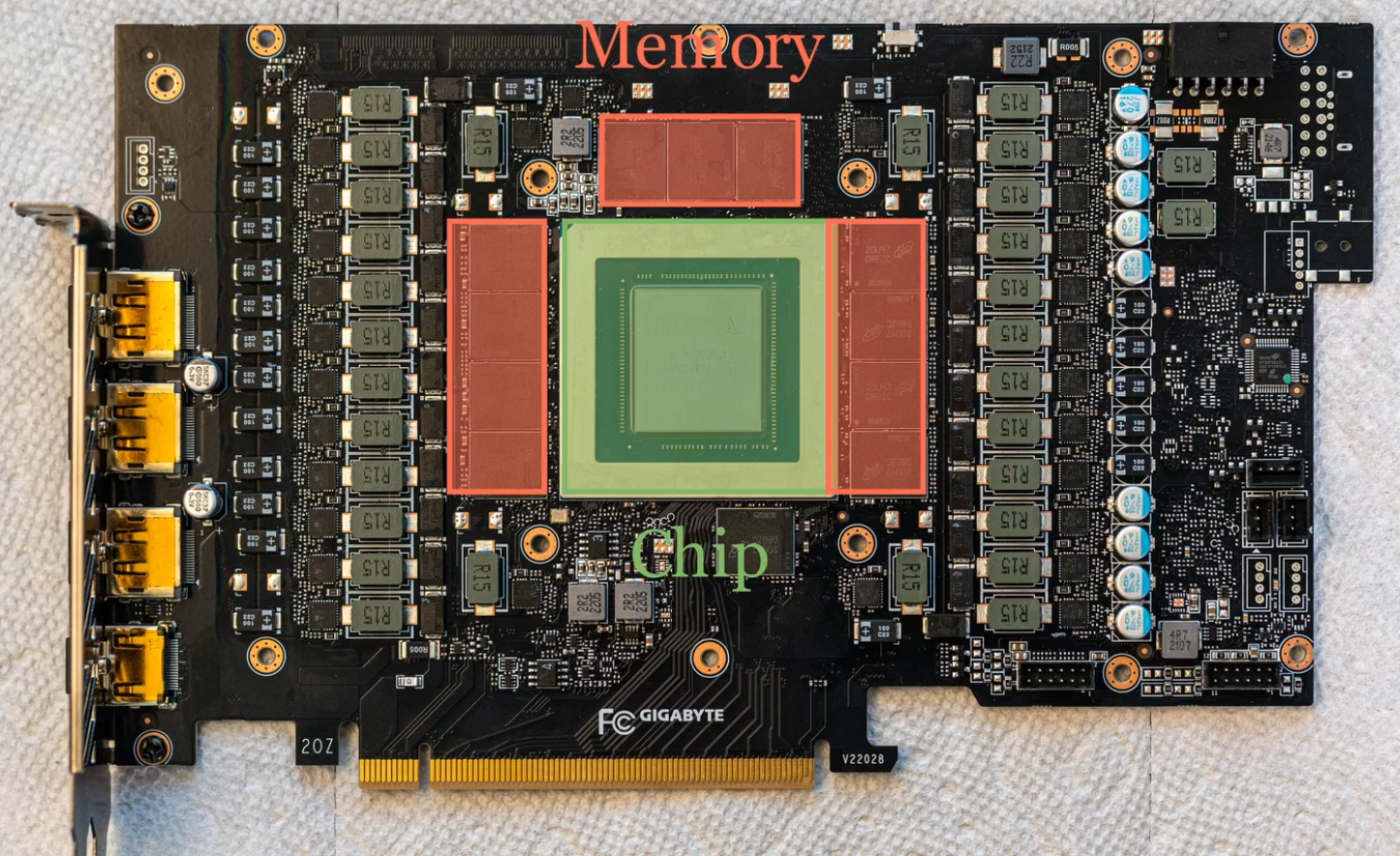

Global Memory (off-chip DRAM, VRAM on discrete GPUs)

-

Physical Location :

-

Off-chip DRAM (VRAM on discrete GPUs, DRAM on integrated GPUs)

-

-

Scope / Visibility :

-

GPU-wide.

-

-

“Global memory” is a logical/architectural term; “VRAM” is a physical/hardware term.

-

On discrete GPUs, this usually maps to VRAM.

-

On integrated GPUs or APUs (where GPU and CPU share DRAM), “global memory” is just system RAM.

-

In that case, there is no separate VRAM, but the hardware still calls it “global” because it’s visible to all threads.

-

-

Holds data for the lifetime of the allocation, across kernel launches (grids), until the host frees it.

-

All threads and the host have access to global memory.

-

High-bandwidth, higher-latency global memory; large capacity.

-

Some platforms provide unified system/GPU memory where the GPU uses system DRAM.

Host-Visible Physical Memory

-

`HOST_VISIBLE`

-

CPU can map the memory with `vkMapMemory` and read/write it.

-

-

Why not make all VRAM host-visible?

-

Mapping all VRAM to the CPU would waste PCIe BAR address space and could reduce GPU performance by forcing VRAM into a less optimal configuration.

-

Host-visible VRAM is generally slower for the GPU than purely device-local VRAM because of cacheability and controller constraints.

-

Properties

-

`HOST_CACHED`

-

CPU accesses are cached in the CPU’s cache hierarchy (fast for CPU reads; if the memory is not also `HOST_COHERENT`, writes need an explicit flush and reads an explicit invalidate).

-

-

`DEVICE_LOCAL`

-

Memory is physically close to the GPU (VRAM on discrete GPUs, DRAM on integrated GPUs).

-

Where is it

-

`MEMORY_PROPERTY_HOST_VISIBLE` only means the CPU can map that VkDeviceMemory (i.e. `vkMapMemory` works). It does not guarantee where the bytes physically live.

-

To know whether that host-visible memory counts against VRAM or system RAM, check the memory heap associated with the memory type. If the memory type’s heapIndex points to a heap that has `MEMORY_HEAP_DEVICE_LOCAL`, that heap is the device-local heap (what you should treat as “VRAM” for accounting). If the heap does not have `DEVICE_LOCAL`, it’s system RAM (account against main memory).

-
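The heap lookup described above can be sketched with plain data structures. The dictionaries below are a hypothetical stand-in for what `vkGetPhysicalDeviceMemoryProperties` reports on a discrete GPU; the field names `heap_index` and `flags`, the sizes, and the helper `backing_of` are all invented for the example.

```python
DEVICE_LOCAL = "DEVICE_LOCAL"
HOST_VISIBLE = "HOST_VISIBLE"
HOST_COHERENT = "HOST_COHERENT"


def backing_of(memory_type, heaps):
    """Return 'VRAM' or 'system RAM' for accounting, based on the type's heap flags."""
    heap = heaps[memory_type["heap_index"]]
    return "VRAM" if DEVICE_LOCAL in heap["flags"] else "system RAM"


# Hypothetical layout resembling a discrete GPU.
heaps = [
    {"size": 8 << 30, "flags": {DEVICE_LOCAL}},  # heap 0: device-local
    {"size": 16 << 30, "flags": set()},          # heap 1: not device-local
]
memory_types = [
    {"heap_index": 0, "flags": {DEVICE_LOCAL}},
    {"heap_index": 1, "flags": {HOST_VISIBLE, HOST_COHERENT}},
    {"heap_index": 0, "flags": {DEVICE_LOCAL, HOST_VISIBLE, HOST_COHERENT}},
]

report = [backing_of(t, heaps) for t in memory_types]
```

Note that the decision uses the heap's flags, not the memory type's own property flags: the third type is host-visible yet still counts against the device-local heap.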

Discrete GPU (most desktop cards)

-

Host-visible memory that is not device-local lives in system RAM, not in GPU VRAM.

-

The task manager shows it as System RAM.

-

The CPU owns system RAM → the GPU accesses it over PCIe using DMA.

-

Vulkan marks this memory type as `HOST_VISIBLE` because the driver can map it into the CPU’s virtual address space (`vkMapMemory`).

-

It is not device-local, so GPU access is slower and higher-latency than VRAM.

-

-

Discrete GPU + resizable BAR (modern systems)

-

Resizable BAR lets the CPU map large VRAM ranges.

-

Physically inside GPU VRAM.

-

Host-visible due to large PCIe BAR aperture.

-

Still slower for CPU than normal RAM but faster than old small BAR windows.

-

This creates memory types that are both host-visible and device-local on a dGPU.

-

-

Discrete GPU with a PCIe BAR that exposes VRAM (old default BAR)

-

Physically still VRAM on the GPU card.

-

The task manager reports it as GPU memory, not System RAM.

-

This situation appears in Vulkan as host-visible + device-local memory types.

-

The driver exposes this region to the CPU, so it becomes host-visible.

-

Often small (MBs–GBs, depending on BAR size and driver config).

-

CPU writes are slow due to PCIe latency, even though the memory is VRAM.

-

-

Integrated GPU / APU

-

There is no VRAM; all GPU memory (both host-visible and device-local) is system RAM.

-

The task manager shows it as System RAM.

-

CPU and GPU share DRAM via a unified memory controller.

-

Vulkan will expose memory types that are both DEVICE_LOCAL and HOST_VISIBLE.

-

GPU access time can vary due to shared bandwidth or caches, but it’s still significantly faster than PCIe-based access.

-

BAR (Base Address Register)

-

“Discrete GPU with a PCIe BAR that exposes VRAM” (old/default BAR)

-

Historically:

-

GPUs expose only a small PCIe BAR window (typically 256 MB).

-

The CPU can map only that tiny window of VRAM at once.

-

The driver remaps that window on demand if you try to access different VRAM ranges.

-

Because the window is so small, it is rarely used for general-purpose CPU access.

-

-

So you can map VRAM, but only a tiny slice, and it’s awkward and slow for real work.

-

This is the legacy BAR situation.

-

-

“Discrete GPU + Resizable BAR” (modern systems)

-

Resizable BAR is a PCIe capability that lets the BAR window be expanded to cover the entire VRAM.

-

Instead of 256 MB, the CPU can directly map all VRAM.

-

No remapping tricks.

-

Vulkan can expose device-local memory that is also host-visible for large allocations.

-

Games can stream assets more efficiently because CPU → VRAM access no longer requires the small-window workaround.

-

-

This is the full VRAM mapping situation.

-

Resizable BAR only changes addressability, not the speed of PCIe.

-

The GPU's own access speed to VRAM is unaffected by whether the BAR is small or large; any gain comes from more efficient CPU-to-VRAM transfers.

-

By Intel: Resizable BAR (Base Address Register) is a PCIe capability. This is a mechanism that allows the PCIe device, such as a discrete graphics card, to negotiate the BAR size to optimize system resources. Enabling this functionality can result in a performance improvement.

-

-

Discussion:

-

Jesse:

-

`BAR_pcie_access_flags := vk.MemoryPropertyFlags{.DEVICE_LOCAL, .HOST_VISIBLE, .HOST_COHERENT}` I use that for my UBO buffer

-

It's what the BAR is (and where "resizable bar" comes from if you've heard the term)

-

-

Caio:

-

Can it be `DEVICE_LOCAL` and `HOST_VISIBLE` at the same time? I assumed it was exclusive. How do you update the buffer?

-

-

Jesse:

-

Yes, within some limits

-

I just write through to the pointer: `intrinsics.mem_copy_non_overlapping(rawptr(ubo_frame_01_ptr), &per_frame_ubo, size_of(per_frame_ubo))`

-

`ubo_frame_01_ptr` ping pongs at an offset determined by the frame index

-

Because multiple frames can be in flight at once

-

They need different cameras

-

In practice this means I effectively have 2 UBOs I source from

-

I also have two sets of positions for each mesh

-

BAR is a small segment of CPU-addressable memory on the GPU that is also relatively high-performance read access from the GPU

-

You want that allocation to be very small

-

Because it's not virtualized

-

And if multiple applications want it, they cannot share

-

-

Lee Michael:

-

I had not heard of this! My last Vulkan app actually just assumed ReBAR, and I didn't imagine that would be a downside.

-

-

Jesse:

-

Yeah, at least in Vulkan you can try to allocate upfront and if it fails, just use the ordinary fallback

-

So at least it won't crash in the middle of the application runtime
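The ping-pong offset scheme mentioned in the discussion can be sketched as offset arithmetic. This is not the code from the conversation: `ubo_offset`, the 208-byte UBO size, and the 256-byte alignment are assumptions for illustration, with the alignment standing in for the device's `minUniformBufferOffsetAlignment` limit.

```python
def align_up(value, alignment):
    # Alignment is assumed to be a power of two, as Vulkan offset alignments are.
    return (value + alignment - 1) & ~(alignment - 1)


def ubo_offset(frame_index, ubo_size, frames_in_flight=2, min_alignment=256):
    # Each in-flight frame gets its own aligned slice of one mapped buffer.
    stride = align_up(ubo_size, min_alignment)
    return (frame_index % frames_in_flight) * stride


offsets = [ubo_offset(frame, ubo_size=208) for frame in range(4)]
```

With two frames in flight the CPU alternates between two slices, so it never overwrites the camera data a frame still being rendered is reading from.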

-

-

-

Enabling, by Intel :

-

Use your system’s latest motherboard firmware supporting Resizable BAR.

-

Enter the system’s BIOS/UEFI firmware configuration menu by pressing the DEL key during system start up. This key may vary between each system manufacturer, please check with your system manufacturer for specific instructions as necessary.

-

Compatibility Support Module (CSM) or Legacy Mode must be disabled and UEFI boot mode must be Enabled.

-

Ensure the following settings are set to Enabled (or Auto if the Enabled option is not present):

-

Above 4G Decoding

-

Re-Size BAR Support

-

-

Use the Intel® Driver and Support Assistant (Intel DSA) to confirm that Resizable BAR is enabled on your system.

-

Host-Coherent

-

Host-coherent memory means CPU and GPU share the same coherent view of the data — no explicit flush/invalidate needed.

-

Often backed by system RAM (on discrete GPUs, that’s typical for PCIe-mapped system memory).

-

On integrated GPUs, system DRAM is naturally coherent if the architecture supports it.

-

-

Non-coherent host-visible memory means CPU and GPU caches aren’t kept in sync automatically.

-

Requires manual flush/invalidate via Vulkan commands.

-

This often happens when host-visible memory is still on the GPU’s side of the PCIe mapping.

-

-

`HOST_COHERENT`

-

CPU writes/reads are automatically visible to the GPU without `vkFlushMappedMemoryRanges` / `vkInvalidateMappedMemoryRanges`. Ordering between CPU writes and GPU work still has to be synchronized (fences, barriers).
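For non-coherent memory, the ranges passed to the flush/invalidate calls have to respect the device's `nonCoherentAtomSize` limit. The sketch below shows one way to widen a written byte range to atom boundaries; `flush_range` is a hypothetical helper, and the atom size of 64 is just an example value.

```python
def flush_range(offset, size, atom_size, allocation_size):
    # Round the start down to an atom boundary.
    start = (offset // atom_size) * atom_size
    # Round the end up to an atom boundary, but never past the allocation.
    end = -(-(offset + size) // atom_size) * atom_size
    end = min(end, allocation_size)
    return start, end - start


rng = flush_range(offset=100, size=50, atom_size=64, allocation_size=1024)
tail = flush_range(offset=1000, size=20, atom_size=64, allocation_size=1020)
```

The returned pair would go into the `offset` and `size` fields of a `VkMappedMemoryRange`. The second call shows the tail case, where the range is clamped to the end of the allocation instead of being rounded up.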

-

Cache

Coalescing / contiguous access

-

A coalesced memory transaction is one in which the accesses of all threads in a warp (a half-warp on early NVIDIA hardware) are combined into as few memory transactions as possible. The simplest way to get this is to have consecutive threads access consecutive memory addresses.

-

GPUs batch many threads (warps/wavefronts).

-

If threads in a group load adjacent addresses, the hardware can merge requests into fewer memory transactions (coalescing).

-

Non-sequential or strided accesses increase transactions and reduce effective bandwidth.

Cache lines and alignment

-

Accesses are serviced in cache-line granularity; unaligned or small scattered loads can cause full-line fetches or multiple lines, increasing bandwidth pressure. Designing buffer layouts for aligned, contiguous reads reduces misses.
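The effect of stride and alignment on the number of memory transactions can be estimated by counting how many cache-line-sized segments a warp touches. This is a simplified model, not a description of any specific memory controller: the 128-byte segment size, the 4-byte access size, and the function name `transactions` are assumptions.

```python
def transactions(stride_bytes, base=0, threads=32, access_bytes=4, segment_bytes=128):
    segments = set()
    for t in range(threads):
        addr = base + t * stride_bytes
        # An access may straddle two segments if it is unaligned.
        segments.add(addr // segment_bytes)
        segments.add((addr + access_bytes - 1) // segment_bytes)
    return len(segments)


contiguous = transactions(stride_bytes=4)
strided = transactions(stride_bytes=128)
misaligned = transactions(stride_bytes=4, base=64)
```

In this model a contiguous, aligned warp access needs one segment, the same access shifted by 64 bytes needs two, and a 128-byte stride needs one segment per thread while using only 4 bytes of each.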

Bank conflicts (shared memory)

-

When several threads in a warp access different addresses that map to the same shared-memory bank, the accesses serialize (reads of the same address are broadcast instead). Layout transforms (padding/transpose) can avoid conflicts.
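Why padding helps can be seen by counting how many threads land in the same bank. The sketch assumes 32 banks of one 32-bit word each and models a warp reading one column of a 2D tile stored row by row; `conflict_degree` is a hypothetical name.

```python
from collections import Counter


def conflict_degree(row_stride_words, threads=32, banks=32):
    # Thread t reads element [t][0] of the tile, i.e. word index t * row_stride.
    # Every thread touches a distinct address, so same-bank hits are true conflicts.
    hits = Counter((t * row_stride_words) % banks for t in range(threads))
    # The busiest bank determines how many serialized passes are needed.
    return max(hits.values())


unpadded = conflict_degree(row_stride_words=32)
padded = conflict_degree(row_stride_words=33)
```

With a 32-word row every thread hits bank 0, giving a 32-way conflict; adding one word of padding per row spreads the same column over all 32 banks.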

Texture caches

-

Sampled image access can use specialized caches with different locality assumptions versus raw buffer loads; memory layout (tiling) influences cache efficiency.

GPU VA (Virtual Address)

-

It's a virtual pointer inside the GPU’s virtual address space that the GPU and drivers use to reference device memory.

-

It is not a physical address; the GPU MMU translates it to physical backing memory.

64-bit vs 32-bit VA

-

64-bit : Default for general buffers.

-

32-bit : Used for descriptors (e.g., `VK_EXT_descriptor_buffer` saves memory).

MMU Translation

-

GPU VA → Physical address (VRAM or system RAM) via page tables.

-

Fault Handling : Driver-managed page faults (sparse residency).
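The translation step can be illustrated with a toy single-level page table. Real GPU MMUs use multi-level tables and several page sizes; the 4 KiB page size, the table contents, and the names `translate` and `PageFault` are assumptions made for the sketch.

```python
PAGE_SIZE = 4096  # assumed page size; real GPUs support several sizes


class PageFault(Exception):
    pass


def translate(va, page_table):
    # Split the virtual address into a page number and an offset within the page.
    page, offset = divmod(va, PAGE_SIZE)
    if page not in page_table:
        # No mapping: on real hardware this is a fault the driver has to handle.
        raise PageFault(hex(va))
    backing, physical_page = page_table[page]
    return backing, physical_page * PAGE_SIZE + offset


# Hypothetical mappings: one page backed by VRAM, one by system RAM.
page_table = {
    0x10: ("VRAM", 0x200),
    0x11: ("system RAM", 0x7F),
}

hit = translate(0x10 * PAGE_SIZE + 0x24, page_table)
```

Two adjacent virtual pages can be backed by different physical memories, which is why a GPU VA alone says nothing about where the data lives.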

Vulkan

-

Feature exposure :

-

Vulkan exposes GPU virtual addresses via a feature/extension set (commonly `VK_KHR_buffer_device_address` / the Vulkan 1.2 core `bufferDeviceAddress` feature). An implementation must advertise the feature and the application must enable it at device creation to use GPU addresses.

-

-

Buffer creation and usage bits :

-

To obtain a GPU VA for a buffer, the buffer must be created with the shader/device-address usage bit (e.g. `VK_BUFFER_USAGE_SHADER_DEVICE_ADDRESS_BIT`) so the implementation knows the buffer will be addressable.

-

-

Memory binding :

-

The buffer’s memory must be allocated and bound as usual.

-

The GPU VA refers to the buffer object while that memory is bound.

-

-

Querying the address :

-

Vulkan provides an API (e.g. `vkGetBufferDeviceAddress` with a `VkBufferDeviceAddressInfo`) that returns a 64-bit GPU address for the buffer. That value is a device-virtual address suitable for use by GPU code or by other Vulkan objects that accept device addresses (acceleration structures, device address based pointer data, etc.).

-

-

Using the address in shaders / GPU code :

-

The address can be passed to shaders (e.g., as a 64-bit integer stored in a buffer or push constant) and used by shaders that support buffer-address operations (the SPIR-V `PhysicalStorageBufferAddresses` capability, `GL_EXT_buffer_reference` in GLSL). Ray-tracing acceleration structures and some GPU pointer-chasing data structures commonly rely on device addresses.

-

-

Driver/GPU translation and constraints :

-

The GPU VA is translated by the GPU MMU. The value is only valid while the buffer’s memory remains bound and resident. If memory is freed, reallocated, or pages are made non-resident (sparse binding), dereferencing the GPU VA causes undefined behavior or faults.

-

-

`VK_EXT_descriptor_buffer`:

-

On some drivers, descriptor buffers live in a restricted GPU VA space, which allows those drivers to only spend 32-bits instead of 64-bits to bind a descriptor VA.

-

This VA range is precious. On these drivers, you’ll likely see new memory types that allocate 32-bit VA under the hood.

-

Tiled-GPUs

-

.

.